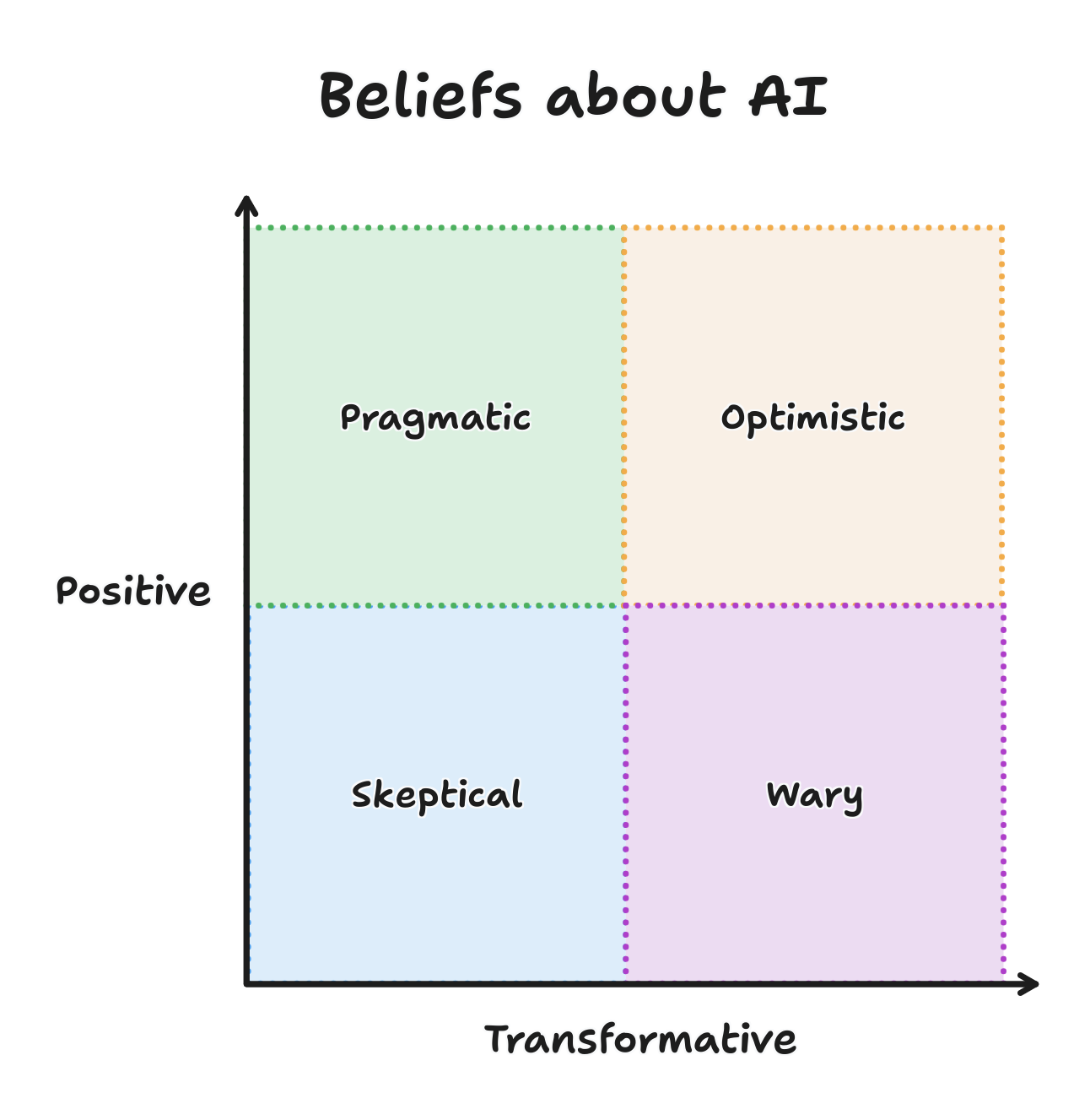

Four perspectives on AI

Maxi Ferreira writes in "Fear and Curiosity and AI" about the two stages of AI adoption among developers:

> A Stage 1 reaction looks something like this: “Ha! Look at this dumb mistake the AI made. It thought I was using Tailwind when I’m actually using Style Components. More like Artificial Unintelligence, am I right? Good luck taking my job, bots.”

> A Stage 2 reaction looks more like this: “Hmm, I wonder why the AI got confused there. Maybe some of my classes in this component look like Tailwind classes. How can I prevent this from happening in the future? Let me add a Cursor Rule to tell it I’m actually using Styled Components in this project.”

The naming suggests a progression: developers start at the defensive, fearful Stage 1 but can progress to Stage 2 by adopting a "curiosity mindset".

I agree with Ferreira about the two reactions. I feel like I've heard them both nearly verbatim while talking about AI with colleagues. And I agree that curiosity and a growth mindset are invaluable in our field and in life more broadly. But the "Stage 1/Stage 2" framing, and the motivations it attributes to each reaction, are uncharitable (at best) and unhelpful (at worst).[1]

The difference between the two reactions depends not on time and mindset but on an individual's belief in AI's transformative potential and positive impact.

The Skeptical see AI's capabilities and limitations. They question the imminent advent of AGI and whether future frontier models will have a significant impact on the world in the long term. They don't see the technological developments of AI as a net positive. They'll use phrases like "over-hyped" and "flash in the pan". They are unlikely to experiment with AI tools. When they see the output of any given model, they tend to have Reaction 1 because criticism confirms their beliefs.

The Wary also see AI's capabilities and limitations but believe that developments in the field will result in dramatic changes to our work and lives. They are concerned that the advancement of this technology will come at a severe cost to other aspects of our world. They'll have a "p(doom)" number, raise concerns about safety, and cite environmental or societal detriments of AI. They may have Reaction 1 but are more likely to avoid AI entirely and encourage others to do the same.

The Pragmatic aren't swayed by arguments for or projections of AGI. They see AI as a tool to help them with tasks today. They'll experiment with prompts and models but will quickly move on when something isn't working. They'll mention "usefulness", "utility", and "productivity". They spend little time thinking about AI beyond a specific problem and solution. They may have Reaction 2, though they'll set a reasonable time box on how long they explore.

The Optimistic see the potential in AI's capabilities and trust that the limitations will eventually be overcome. They believe that future models will radically transform our world for the better, citing problems to be solved in medicine and the environment. They'll encourage others to learn how to use AI so that they "don't get left behind". They tend to have Reaction 2 and will push the boundaries of what is possible with the current state of AI.

I've grouped these four perspectives, but everything here is a spectrum. Depending on the context or day, you might find yourself at any point on the graph. And I've attempted to describe each perspective generously, because I don't believe that any are inherently good or bad. I find myself switching between perspectives throughout the day as I implement features, use tools, and read articles. A good team is probably made up of members from all perspectives.

Which perspective resonates with you today? Do you also find yourself moving around the graph? I would love to hear your thoughts, as I'm sure mine will continue to develop over time.

[1] Despite my disagreement with Ferreira, I still recommend his newsletter. It has helpful insights and interesting links that I'm happy to see in my inbox.